Hi everyone!

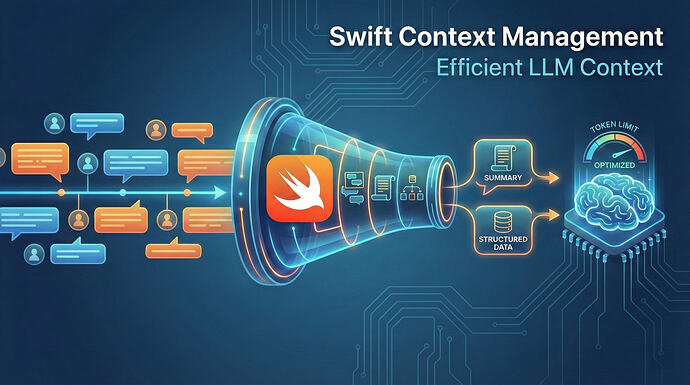

We wanted to share a new package we have been working on called Swift Context Management.

As we build more conversational interfaces in Swift, managing the context window (and staying within token limits) becomes a major challenge. We built this package to help automate the process of pruning, summarizing, and restructuring conversation history so your users don't hit "context length exceeded" errors.

What it does: It provides configurable ContextReductionPolicy strategies to keep your context efficient:

- Progressive Reduction: Automatically retries requests with more aggressive reduction if limits are hit.
- Rolling Summaries: Compresses older history into a running summary while keeping recent turns verbatim.
- Sliding Windows: Simple N-turn retention.
- Structured State: Extracts key facts/decisions into a dedicated state object.
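To make two of these strategies concrete, here is a rough, self-contained sketch of what sliding-window retention and a rolling summary could look like over a plain array of turns. This is not the package's implementation: `Turn`, `slidingWindow`, `rollingSummary`, and the `summarize` closure are hypothetical names made up for illustration, and the summarizer is a placeholder where a real one would call the model.

```swift
// Hypothetical types and functions for illustration; not the package's API.
struct Turn: Equatable {
    enum Role { case user, assistant }
    let role: Role
    let text: String
}

/// Sliding window: keep only the most recent `limit` turns.
func slidingWindow(_ history: [Turn], limit: Int) -> [Turn] {
    Array(history.suffix(max(0, limit)))
}

/// Rolling summary: fold everything older than the last `keepRecent` turns
/// into a single summary string and return the recent turns verbatim.
/// `summarize` stands in for a model call (assumption).
func rollingSummary(
    _ history: [Turn],
    keepRecent: Int,
    previousSummary: String = "",
    summarize: (String, [Turn]) -> String
) -> (summary: String, recent: [Turn]) {
    let recent = Array(history.suffix(max(0, keepRecent)))
    let older = Array(history.dropLast(recent.count))
    let summary = older.isEmpty ? previousSummary : summarize(previousSummary, older)
    return (summary, recent)
}

let history = [
    Turn(role: .user, text: "Plan a trip to Kyoto."),
    Turn(role: .assistant, text: "Sure, when are you going?"),
    Turn(role: .user, text: "Early April."),
    Turn(role: .assistant, text: "Great, cherry blossom season."),
]

// Keep only the last two turns.
let windowed = slidingWindow(history, limit: 2)

// Compress the first two turns, keep the last two verbatim.
let result = rollingSummary(history, keepRecent: 2) { previous, older in
    // Placeholder summarizer: simply concatenates the older turns.
    previous + older.map(\.text).joined(separator: " ")
}
```

The trade-off is roughly this: the sliding window is cheap but forgets everything outside the window, while the rolling summary costs an extra summarization step but keeps a compressed trace of older turns.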

Usage: It integrates easily with FoundationModels. Here is a quick example of a session that automatically manages its own history:

```swift
import FoundationModels
import SwiftContextManagement

let session = LanguageModelSession()

let contextualSession = ContextualSession(
    session: session,
    policy: .rollingSummary() // or .slidingWindow(limit: 10), ...
)

// The session automatically manages context and token limits
let response = try await contextualSession.respond(to: "Hello, world!")
```
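For anyone curious about the idea behind progressive reduction, a minimal synchronous sketch of the retry loop might look like the following. Everything here is an assumption for illustration: `ContextLimitExceeded`, `respondWithProgressiveReduction`, and `keepCounts` are hypothetical names, the `send` closure stands in for a model call, and the actual package may work quite differently (for one thing, a real version would be `async`).

```swift
// Hypothetical error and function names; not the package's API.
struct ContextLimitExceeded: Error {}

/// Tries `send` with each reduction level in order, moving on to a more
/// aggressive level only when the previous attempt exceeded the limit.
/// Any other error thrown by `send` is propagated to the caller unchanged.
func respondWithProgressiveReduction<T>(
    history: [String],
    keepCounts: [Int],
    send: ([String]) throws -> T
) throws -> T {
    for keep in keepCounts {
        do {
            return try send(Array(history.suffix(max(0, keep))))
        } catch is ContextLimitExceeded {
            continue // retry with the next, more aggressive level
        }
    }
    // Every level was still too large.
    throw ContextLimitExceeded()
}

let turns = ["a", "b", "c", "d", "e", "f"]
var attempts = 0

let reply = try respondWithProgressiveReduction(
    history: turns,
    keepCounts: [6, 4, 2]
) { reduced -> String in
    attempts += 1
    // Stand-in for a model call that accepts at most 3 turns.
    guard reduced.count <= 3 else { throw ContextLimitExceeded() }
    return "ok with \(reduced.count) turns"
}
```

In this toy run the first two attempts (6 and 4 turns) are rejected and the third (2 turns) succeeds, which is the "retry with more aggressive reduction" behavior in miniature.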

We have more policies planned (such as semantic recall and topic memory), and we'd love to hear your feedback or ideas for other reduction strategies!

Check it out on GitHub: [swift-context-management]